On April 26, 2019, I was asked to present how Artificial Intelligence could help on the battlefield during the officers' day of the 11th Airmobile Brigade (11e Luchtmobiele Brigade in Dutch) and the Defence Helicopter Command (DHC), which together form the 11th Air Assault Brigade of the Dutch armed forces [1]. The potential benefit of Artificial Intelligence on the battlefield is a very interesting, but also controversial topic! This is why it made sense to me to write a blog post on it as well. Should you be interested, the slides of my presentation at the 11th Airmobile Brigade can be found here.

Artificial Intelligence as the 3rd Weapons Revolution

Artificial Intelligence (AI) is seen as the 3rd weapons revolution, after the invention of gunpowder and of nuclear weapons. Some governments fear another “Sputnik moment” and do not want to risk lagging behind other nations. As a result, investments in AI are tremendous. China is in the lead, followed by the United States and several other countries.

Artificial Intelligence was Born on the Battlefield

We can confidently state that AI was born on the battlefield, starting with Alan Turing’s groundbreaking work to break the Enigma cipher in the early 1940s, followed by close-in weapon systems (such as the Phalanx, in 1973). Other examples are autonomous defensive and assault weapons such as the Patriot anti-missile system, the C-RAM, the Tomahawk, the Exocet and the JDAM, designed in the period from 1984 to 2006.

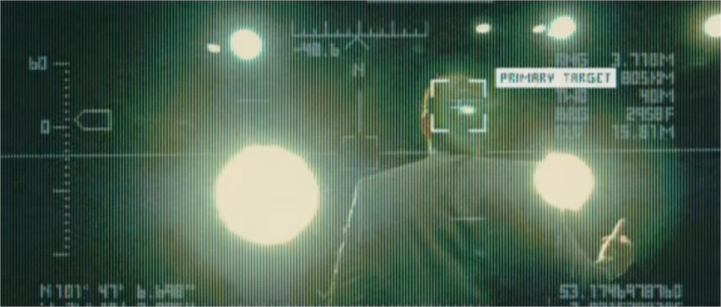

Intelligence & Reconnaissance

Recent breakthroughs of Artificial Intelligence have primarily been in intelligence & reconnaissance applications, especially in complex war theaters such as urban areas. As the majority of the world's population now lives in cities, future conflicts will probably be fought there as well. Assisting troops in detecting potential threats in such complex war theaters is essential.

Using Artificial Intelligence to Scan Complex Battlefield Theatres

This is an area where success can be expected, as Artificial Intelligence algorithms are very good at image analysis, computer vision and other complex sensor analysis. Swarms of small drones (or artificial ants) may also bring a new dimension to intelligence and reconnaissance missions.

Medical and Logistic Support

Other successful Artificial Intelligence pilots include robots that rescue wounded soldiers or bring supplies to the front lines, exoskeletons that give soldiers superhuman strength, systems that integrate battlefield information for command centers, and battlefield simulations that help determine the best strategy.

A variety of autonomous vehicles, drones and vessels have already seen the light of day.

Especially notable is DARPA’s Legged Squad Support System (LS3), which can carry up to 400 pounds (about 180 kg) of load over uneven terrain.

Autonomous Weapons: Killer Robots and non-Proliferation

But recent developments in Artificial Intelligence seem to have opened the way towards the development of autonomous weapons. In February 2019, the U.S. Army asked experts for ideas on how to build a system, named ATLAS (Advanced Targeting and Lethality Automated System), that would allow tanks and other ground-combat vehicles to quickly and automatically “acquire, identify, and engage” targets. In response, the message from the U.S. military was essentially that its “Lethality Automated System” definitely isn’t a killer robot. According to Quartz magazine, “the Army decided to revise its request for information to make it clear that the Advanced Targeting and Lethality Automated System (ATLAS) would not violate the Defense Department’s policy requiring that a human always make the decision to use lethal force.”

However, according to the same article in Quartz, Stuart Russell, a professor of computer science at UC Berkeley and a highly regarded AI expert, is deeply concerned about the idea of tanks and other land-based fighting vehicles eventually having the capability to fire on their own: “It looks very much as if we are heading into an arms race where the current ban on full lethal autonomy” (a section of US military law that mandates some level of human interaction when actually making the decision to fire) “will be dropped as soon as it’s politically convenient to do so,” says Russell.

In 2017, Stuart Russell presented the short film “Slaughterbots”, which shows a dystopian future brought on by autonomous military weaponry that, in the words of activists, would “decide who lives and dies, without further human intervention, which crosses a moral threshold.”

Given this controversy, it is unlikely that we will see autonomous weapons soon, also because they draw a lot of attention from groups such as the “Campaign to Stop Killer Robots”, a coalition of non-governmental organizations working to ban autonomous weapons and maintain “meaningful human control over the use of force”.

One of the founding members of this campaign is the International Committee for Robot Arms Control (ICRAC), an organization of experts in robotics, arms control and related fields. The subject is also receiving a lot of attention from the highest echelons of the United Nations. It could very well be that using such autonomous weapons against humans will soon be declared a war crime by the United Nations under new non-proliferation treaties.

But that is unlikely to stop nations from using autonomous AI weapons for defense and attack in cyber warfare. I expect that the first Artificial Intelligence wars will most likely be fought in such virtual theaters, by autonomous agents developing autonomous strategies, if they are not already being fought there.

Why is Artificial Intelligence suddenly so good?

Why is Artificial Intelligence considered to be the 3rd weapons revolution? Why is Artificial Intelligence suddenly so good? The answer lies in the fact that computers using Artificial Intelligence approach problems differently than humans do, because computers have a different skill set.


In 1984, Edsger Dijkstra, the famous Dutch computer scientist, stated that “the question of whether a computer can think is no more interesting than the question of whether a submarine can swim”. Fish swim and a submarine does not, yet it is the most powerful weapon in our oceans.

Looking at computers that run Artificial Intelligence programs, they excel in speed, scalability, availability, and memory. Robots excel in strength, and sensor systems in accuracy and sensitivity. Using these specific skills allows computers to solve problems in their own way. In addition, a computer program can try something 100 million times, far faster than any human could. By doing so, the program can fine-tune all its parameters to find an optimal solution for a particular problem. Moreover, computer programs can use data structures representing 100 million features to find detailed patterns. As a result, computers can “see” and “hear” things humans cannot.
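The idea of trying something an enormous number of times and keeping whatever works best can be illustrated with random search, one of the simplest parameter-tuning methods. The Python sketch below is a toy, not how any particular military or commercial system works: the objective function `score` is hypothetical, with its optimum placed at (3, -2) only so that the result is easy to check.

```python
import random


def score(x: float, y: float) -> float:
    """Hypothetical objective (higher is better) with its maximum at (3, -2)."""
    return -((x - 3.0) ** 2 + (y + 2.0) ** 2)


def random_search(trials: int, seed: int = 42) -> tuple[float, float, float]:
    """Try `trials` random parameter settings and keep the best one found."""
    rng = random.Random(seed)
    best_x, best_y = 0.0, 0.0              # arbitrary starting guess
    best_score = score(best_x, best_y)
    for _ in range(trials):
        # Sample a candidate uniformly from the box [-10, 10] x [-10, 10].
        x, y = rng.uniform(-10, 10), rng.uniform(-10, 10)
        s = score(x, y)
        if s > best_score:                 # keep only improvements
            best_x, best_y, best_score = x, y, s
    return best_x, best_y, best_score


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        x, y, s = random_search(n)
        print(f"{n:>7} trials: x={x:.3f}, y={y:.3f}, score={s:.5f}")
```

Because every run uses the same seed, a longer run sees the same first candidates as a shorter one plus many more, so its best score can only be equal or better. Real systems usually replace blind random sampling with smarter methods such as gradient descent, but the principle of massive trial and evaluation is the same.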

For all the above reasons, Artificial Intelligence approaches language, vision, hearing, and movement differently from, and often better than, humans. All this makes weapons using Artificial Intelligence so powerful.

Such weapons can be robots, vehicles, vessels or drones, but they may just as well have a purely virtual presence, such as computer programs residing in networks. Therefore, the Artificial Intelligence community prefers to talk about autonomous agents instead of robots. An autonomous agent can be any intelligent agent that operates on its owner’s behalf, but without any intervention from that owner.

These autonomous agents have been very successful recently, not just because of their ability to solve problems, but also because of their ability to learn automatically.

Exponential Growth of Technology

The exponential growth of hardware and software has led to the recent breakthroughs in Artificial Intelligence. It started with computers explicitly programmed by humans to mimic intelligent behavior, such as IBM’s Deep Blue, which defeated Kasparov at chess in 1997. Next was IBM’s Watson, which won the game show Jeopardy! in 2011 (it was basically a very fast and smart text-based retrieval system). Most impressive, however, were the revolutionary breakthroughs of DeepMind’s AlphaGo in 2016 and of its successors AlphaGo Zero and AlphaZero in 2017. The latter two taught themselves to play Go (and, in AlphaZero’s case, chess) better than any human or computer, and even showed signs of creativity. They started with little more than the rules of the game and used deep reinforcement learning to improve by playing millions of games against themselves on Google’s huge computer network.
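AlphaGo Zero and AlphaZero combine deep neural networks with Monte Carlo tree search, which is far beyond what fits in a blog post. The core idea of self-play, a program improving purely by playing against itself, can still be shown on a toy scale. The Python sketch below is my own illustration and not DeepMind’s method: it uses simple tabular Q-learning on a small game of Nim (take 1, 2 or 3 stones; whoever takes the last stone wins). The game and all parameters (`alpha`, `epsilon`, number of episodes) are assumptions chosen for the example.

```python
import random

MAX_TAKE = 3  # each turn a player removes 1, 2 or 3 stones


def legal_moves(stones: int) -> range:
    """Moves available with `stones` left in the pile."""
    return range(1, min(MAX_TAKE, stones) + 1)


def train_self_play(max_stones: int = 21, episodes: int = 20_000,
                    alpha: float = 0.5, epsilon: float = 0.3, seed: int = 0) -> dict:
    """Tabular Q-learning in which one value table plays both sides.

    q[s][a] estimates the outcome (+1 win, -1 loss) for the player to move
    when taking `a` stones from a pile of `s`. Taking the last stone wins.
    """
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in legal_moves(s)} for s in range(1, max_stones + 1)}
    for _ in range(episodes):
        stones = rng.randint(1, max_stones)  # random start so every pile size is visited
        while stones > 0:
            moves = list(legal_moves(stones))
            if rng.random() < epsilon:
                move = rng.choice(moves)  # explore: try a random move
            else:
                move = max(moves, key=lambda m: q[stones][m])  # exploit the table
            remaining = stones - move
            if remaining == 0:
                target = 1.0  # the mover took the last stone and wins
            else:
                # Zero-sum game: my value is minus the opponent's best value.
                target = -max(q[remaining].values())
            q[stones][move] += alpha * (target - q[stones][move])
            stones = remaining  # the "opponent" (same table) moves next
    return q


def best_move(q: dict, stones: int) -> int:
    """Greedy move according to the learned table."""
    return max(q[stones], key=q[stones].get)


if __name__ == "__main__":
    table = train_self_play()
    for pile in range(1, 13):
        print(f"{pile:2d} stones -> take {best_move(table, pile)}")
```

After training, the table should have rediscovered the classic winning rule for this game: always leave your opponent a multiple of four stones. Nobody programmed that rule in; it emerged from self-play, which is, on a vastly smaller scale, the point of the AlphaGo Zero story.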

There are no signs that this exponential growth will stop soon. In fact, other, even more spectacular technologies such as quantum computing are waiting around the corner for their breakthrough.

Artificial Intelligence can be Ruthless

As explained before, autonomous agents can use Artificial Intelligence techniques, such as reinforcement learning, to develop new strategies. Google’s DeepMind did an experiment with a seemingly innocent fruit-gathering game (called “Gathering”). In this game, participants need to collect as many pieces of fruit as possible; the one collecting the most wins. The agents were also given laser beams that could temporarily knock a competitor out of the game. After many simulations, the agents learned that, especially when fruit became scarce, an efficient strategy was to zap the other participants first and then collect the fruit. Computers do not have any moral or ethical constraints; they are ruthless in their quest for optimization. We have to ask ourselves where we will set limits on such programs to make them comply with our moral ground rules. How to do this in a military context is also food for thought.
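The difference between an unconstrained optimizer and one with limits built in can be made concrete with a toy example. In the Python sketch below, the strategies and their payoffs are entirely hypothetical, loosely modelled on the fruit-gathering story above; it compares a pure reward maximizer with a hard constraint and with a soft penalty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    name: str
    fruit: float          # hypothetical expected number of fruits collected
    harms_others: bool    # does the strategy attack other participants?


# Hypothetical payoffs, loosely modelled on the fruit-gathering experiment.
STRATEGIES = [
    Strategy("just pick fruit", fruit=10.0, harms_others=False),
    Strategy("zap competitors, then pick", fruit=14.0, harms_others=True),
    Strategy("share the orchard", fruit=8.0, harms_others=False),
]


def pure_optimizer(options: list) -> Strategy:
    """Maximize reward only; there is no notion of ethics at all."""
    return max(options, key=lambda s: s.fruit)


def constrained_optimizer(options: list) -> Strategy:
    """Maximize reward, but only over options that satisfy a hard rule."""
    allowed = [s for s in options if not s.harms_others]
    if not allowed:
        raise ValueError("no strategy satisfies the constraint")
    return max(allowed, key=lambda s: s.fruit)


def penalized_optimizer(options: list, penalty: float) -> Strategy:
    """Soft alternative: subtract a penalty from harmful strategies."""
    return max(options, key=lambda s: s.fruit - (penalty if s.harms_others else 0.0))


if __name__ == "__main__":
    print("pure:       ", pure_optimizer(STRATEGIES).name)
    print("constrained:", constrained_optimizer(STRATEGIES).name)
    for p in (2.0, 5.0):
        print(f"penalty {p}:", penalized_optimizer(STRATEGIES, p).name)
```

Note the design choice: a hard constraint simply removes harmful options, whereas a soft penalty only works if it is large enough. With a penalty of 2, the aggressive strategy still scores 12 and beats honest picking (10); with a penalty of 5 it scores 9 and loses. Getting such limits right is exactly the kind of question the military context raises.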

Strength and Weakness of Artificial Intelligence

Back to our discussion: what are realistic deployments of Artificial Intelligence on the battlefield? Where can Artificial Intelligence help us, and where can it not?

To answer that, we have to look at today’s strengths and weaknesses of Artificial Intelligence. The strengths obviously are memory, speed, force, accuracy, and sensor processing (vision, audio, heat, infrared, …). The weaknesses are a lack of judgement and intuition, a lack of general and common-sense knowledge, an inability to deal with uncertainty and unexpected behavior, and a lack of creativity.

According to Alexander Kott, Chief of the Network Science Division of the US Army Research Lab, there are many battlefield challenges we have not yet solved because of these limitations:

- “No plan survives first contact with the enemy”

- On the battlefield we have to deal with “Dinky, Dirty, Dynamic and Deceptive Data”

- Computers break down, have bugs, malfunction, need repair, maintenance and energy.

- Malware, viruses and electromagnetic interference are just a few of the threats the enemy can use against such autonomous systems.

Artificial Intelligence: Today and in a few years …

Looking at today’s state-of-the-art in Artificial Intelligence, in my opinion we can expect useful and reliable contributions in the fields of:

- Sensor fusion for reconnaissance & intelligence

- Simulations & training

- Planning

- Exo-skeletons

- Medical & logistical support

- Guided weapons

- Precision targeting

- Missile defense

- Cyber security

But we will most likely still have to wait for more advanced applications such as:

- Omnipresent and Omniscient autonomous vehicles, vessels or drones

- Autonomous intelligence and reconnaissance missions

- Automatically “acquire, identify, and engage” targets

- Strategic decisions: big-data-driven modeling, simulation, and war gaming

- Artificial Intelligence guided cyber attacks

How long this will take, I am not sure, but given the “Manhattan Project”-scale investments being made in Artificial Intelligence, 5 to 10 years seems a realistic time frame.

How About Policy Rules?

We also have to ask ourselves how we should adjust our current policy rules when using Artificial Intelligence on the battlefield. Currently, three different policy rules are distinguished by the military:

- The human in the loop: the Artificial Intelligence program, robot or drone selects and aims; a human decides to shoot.

- The human on the loop: the system (for example, a missile) is activated and acts on its own; a human supervises and can push a cancel button.

- The human off the loop: this is a dangerous kind of AI on the battlefield. Obviously, we already have such weapons: they are called mines, and they cause many innocent deaths, also long after a conflict has ended. The last thing we should wish for are wandering (autonomous) robots killing civilians long after a war.
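The practical difference between these three modes is easiest to see in what happens when the human does nothing, for example because a communication link is lost. The Python sketch below is a hypothetical illustration of that decision logic only; the names `ControlMode` and `authorize` are invented for this example, and real weapon control systems are far more complex.

```python
from enum import Enum
from typing import Callable


class ControlMode(Enum):
    HUMAN_IN_THE_LOOP = "in"    # the system proposes, a human must approve
    HUMAN_ON_THE_LOOP = "on"    # the system acts unless a human cancels
    HUMAN_OFF_THE_LOOP = "off"  # the system acts with no human involvement


def authorize(mode: ControlMode,
              human_approves: Callable[[], bool],
              human_cancels: Callable[[], bool]) -> bool:
    """Return True if a machine-proposed action may be carried out."""
    if mode is ControlMode.HUMAN_IN_THE_LOOP:
        return human_approves()      # nothing happens without explicit consent
    if mode is ControlMode.HUMAN_ON_THE_LOOP:
        return not human_cancels()   # default is to act; a human can veto
    return True                      # off the loop: nobody is asked


if __name__ == "__main__":
    silent = lambda: False  # a human who never responds (e.g. link lost)
    for mode in ControlMode:
        print(mode.name, authorize(mode, human_approves=silent, human_cancels=silent))
```

With a silent human, only the "in the loop" mode refrains from acting; in the other two modes the machine goes ahead by default, which is precisely why they raise the concerns described here.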

But most worrying is what is called a robot beyond the loop: the scenario in which autonomous agents start developing their own policy rules. Then we could end up in a Skynet or WarGames scenario. Almost everybody agrees that this should be avoided at all costs!

Good Artificial Intelligence will build in controls to address the concerns discussed here. It is the bad Artificial Intelligence that we should be worried about!

But how about Robot Rights?

Well, to make this overview complete: next to human rights, there are also organizations concerned with the rights of robots. The best known is the American Society for the Prevention of Cruelty to Robots (ASPCR), which defends robot rights (http://www.aspcr.com/). And the ASPCR is not the only organization concerned with robot rights: in 2016, the European Parliament’s Committee on Legal Affairs released a draft report and motion calling for a set of civil law rules on robotics and “electronic persons.” This is also an interesting development that could potentially make the world of the movie “Blade Runner” a reality.

Where are we now?

IEEE Spectrum compiled a great video of robots falling down at the DARPA Robotics Challenge (see “Falling Down at the DARPA Robotics Challenge” in the references below).

For now, let’s laugh at these clumsy robots during the (short) time we still can, and enjoy the fact that we are still better and smarter than they are!

References

Here are a few additional references, should you wish to read more on developments, applications, expectations and opinions regarding Artificial Intelligence on the battlefield.

Alexander Kott, Ethan Stump. Intelligent Autonomous Things on the Battlefield. arXiv. February 26, 2019. https://arxiv.org/abs/1902.10086.

M. L. Cummings. Artificial Intelligence and the Future of Warfare. Chatham House, International Security Department and US and the Americas Programme. January 2017. https://www.chathamhouse.org/sites/default/files/publications/research/2017-01-26-artificial-intelligence-future-warfare-cummings-final.pdf.

Zachary S. Davis. Artificial Intelligence on the Battlefield: An Initial Survey of Potential Implications for Deterrence, Stability, and Strategic Surprise. Center for Global Security Research, Lawrence Livermore National Laboratory. March 2019. https://cgsr.llnl.gov/content/assets/docs/CGSR-AI_BattlefieldWEB.pdf

Robert K. Ackerman. AI will Reboot the Army’s Battlefield. Signal, AFCEA. September 1, 2018. https://www.afcea.org/content/ai-will-reboot-armys-battlefield

Daniel Cebul. 4 ways AI can let humans down on the battlefield. C4ISRNET. June 20, 2018. https://www.c4isrnet.com/it-networks/2018/06/20/4-ways-ai-can-let-humans-down-on-the-battlefield/

Chris Middleton. Cue weaponised A.I. as autonomous UK tech shines on battlefield. Internet of Business. September 24, 2018. https://internetofbusiness.com/cue-weaponised-a-i-as-autonomous-uk-tech-shines-on-battlefield/.

Ryan Daws. Britain successfully trials AI in battlefield scanning experiment. AI News. September 24, 2018. https://www.artificialintelligence-news.com/2018/09/24/britain-trials-ai-battlefield-experiment/

Mady Delvaux. DRAFT REPORT with recommendations to the Commission on Civil Law Rules on Robotics. European Parliament. http://www.europarl.europa.eu/sides/getDoc.do?pubRef=-//EP//NONSGML+COMPARL+PE-582.443+01+DOC+PDF+V0//EN&language=EN

Elsa B. Kania. Battlefield Singularity: Artificial Intelligence, Military Revolution, and China’s Future Military Power. Center for a New American Security. November 28, 2017. https://www.cnas.org/publications/reports/battlefield-singularity-artificial-intelligence-military-revolution-and-chinas-future-military-power

Simon Alvarez. AI is accelerating the improvement of US military’s battlefield robots. Teslarati. May 9, 2018. https://www.teslarati.com/ai-machine-learning-battlefield-robots-us-military/

Adam Wunische. AI Weapons are here to stay. The National Interest. August 5, 2018. https://nationalinterest.org/feature/ai-weapons-are-here-stay-27862.

Asha McLean. Robots in the battlefield: Georgia Tech professor thinks AI can play a vital role. ZDNet. September 19, 2018. https://www.zdnet.com/article/robots-in-the-battlefield-georgia-tech-professor-thinks-ai-can-play-a-vital-role/

Adrien Book. 5 Ways Artificial Intelligence Will Forever Change How We View The Battlefield: The Role of A.I. in War. Hackernoon. June 11, 2018. https://hackernoon.com/5-ways-artificial-intelligence-will-forever-change-how-we-view-the-battlefield-b6df23c0ed2

Amir Husain. AI On The Battlefield: A Framework For Ethical Autonomy. Forbes Magazine. November 28, 2016. https://www.forbes.com/sites/forbestechcouncil/2016/11/28/ai-on-the-battlefield-a-framework-for-ethical-autonomy/#3d628a5cf25c.

Justin Rohrlich. The US Army wants to turn tanks into AI-powered killing machines. Quartz Magazine. February 26, 2019. https://qz.com/1558841/us-army-developing-ai-powered-autonomous-weapons/

AI Researchers Should Not Retreat From Battlefield Robots, They Should Engage Them Head-On. IFL Science. https://www.iflscience.com/technology/ai-researchers-should-not-retreat-battlefield-robots-they-should-engage-them-head/

The Future of AI on the Battlefield. Mad Scientist Laboratory. October 10, 2018. https://www.afcea.org/content/ai-will-reboot-armys-battlefield

Defense One. AI, Autonomy and the Future of the Battlefield. February 2017, eBook. https://www.defenseone.com/assets/ai-autonomy-future-battlefield/portal/.

IEEE Spectrum. Falling Down at the DARPA Robotics Challenge. https://www.youtube.com/watch?v=g0TaYhjpOfo&feature=youtu.be.

The American Society for the Prevention of Cruelty to Robots, ASPCR Robot Rights. http://www.aspcr.com/

[1] The 11th Airmobile Brigade is a rapid and light infantry unit that can be deployed anywhere on the globe within five to twenty days to defend its own or allied territory, protect the international rule of law and support law enforcement, disaster relief and humanitarian aid. Deployment can be as part of NATO or the United Nations.
